|

|

|

[Sponsors] | |||||

|

|

|

#541 |

|

New Member

Daniel

Join Date: Jun 2010

Posts: 14

Rep Power: 16  |

Glad to contribute!

Last-level cache memory size does seem to matter, as it should be key in keeping core clusters properly fed in sparse matrix math. Itís interesting to note that 3 cores of that notebookís cpu were enough to go by those 128MB of L4 cache at near full bus speed - around 42MB per cpu is still huge. Even going to 4 cores reduced available last-level cache to ~32MB per core, still gargantuan. As flotus1 mentioned, Iím convinced that notebook cpu could not sustain 4 cores at full speed of 3200 MHz - less per cycle ops, less data processed.. Still, itís got limits. If Iím not mistaken, the maximum bus speed for that CPU is 25.6 GB/s (200x the size of the last level cache memory per sec  ) and for sparse matrix math, that as far as that cpu could go. Quite good for 2013 when it was launched.. ) and for sparse matrix math, that as far as that cpu could go. Quite good for 2013 when it was launched..

|

|

|

|

|

|

|

|

|

#542 |

|

New Member

Join Date: Nov 2016

Posts: 15

Rep Power: 10  |

I have just bought the used 2 x Xeon E5-2678 v3 + X99 dual Jingsha + 8x8GB 2400 MHz on Aliepxress with around 620USD. After doing some benchmark I realized that E5-2678 v3 does not support 2400Mhz DDR4, so I changed the CPU to 2 x E5-2680 v4. Those two used CPU (2678v3 and 2680v4) are at same price now (around <100 USD) and even 2680v4 is slightly cheaper.

Here are some benchmark result Case 1. 2 x Xeon E5-2678 v3 + X99 dual Jingsha + 8x8GB 2400 MHz; Hyper threading OFF Centos 8.5, Opean Foam 8 on docker: # cores Wall time (s) 16 93.02 20 83.81 24 80.22 Case 2. 2 x Xeon E5-2678 v3 + X99 dual Jingsha + 8x8GB 2400 MHz; Hyper threading OFF Linux Mint 20.3, Open Foam 9; HT OFF # cores Wall time (s) 16 87.9 20 80.89 24 77.37 HT ON # cores Wall time (s) 16 90.92 20 81.59 24 80.66 Case 3. 2 x Xeon E5-2680 v4 + X99 dual Jingsha + 8x8GB 2400 MHz; Hyper threading OFF Linux Mint 20.3, Open Foam 9; HT OFF # cores Wall time (s) 16 81.64 20 74.62 24 70.79 28 68.11 So with limited budget, I think 2 x Xeon E5-2680 v4 + X99 dual + 8x8GB 2400 MHz is a good choice |

|

|

|

|

|

|

|

|

#543 |

|

Senior Member

Will Kernkamp

Join Date: Jun 2014

Posts: 371

Rep Power: 14  |

I have been eyeing these cheap E5-2680 v4's. Your results confirm performance. I think I will get myself a pair, because I have a machine that has the DDR4-2400 already, but v3 cpu.

|

|

|

|

|

|

|

|

|

#544 |

|

New Member

DS

Join Date: Jan 2022

Posts: 15

Rep Power: 4  |

Cisco C460 M4 4xE7-8880 v3, 32 x 16GB =512Gb (2R RAM), HT Off.

(bench_template_v02.zip) Code:

Prepare case run_32... Running surfaceFeatures on /home/ds/OpenFOAM/bench_template_v02/run_32 Running blockMesh on /home/ds/OpenFOAM/bench_template_v02/run_32 Running decomposePar on /home/ds/OpenFOAM/bench_template_v02/run_32 Running snappyHexMesh in parallel on /home/ds/OpenFOAM/bench_template_v02/run_32 using 32 processes real 3m47,032s user 117m24,307s sys 0m53,153s Prepare case run_64... Running surfaceFeatures on /home/ds/OpenFOAM/bench_template_v02/run_64 Running blockMesh on /home/ds/OpenFOAM/bench_template_v02/run_64 Running decomposePar on /home/ds/OpenFOAM/bench_template_v02/run_64 Running snappyHexMesh in parallel on /home/ds/OpenFOAM/bench_template_v02/run_64 using 64 processes real 2m29,357s user 151m42,590s sys 1m15,332s Prepare case run_72... Running surfaceFeatures on /home/ds/OpenFOAM/bench_template_v02/run_72 Running blockMesh on /home/ds/OpenFOAM/bench_template_v02/run_72 Running decomposePar on /home/ds/OpenFOAM/bench_template_v02/run_72 Running snappyHexMesh in parallel on /home/ds/OpenFOAM/bench_template_v02/run_72 using 72 processes real 2m42,417s user 186m2,877s sys 1m44,770s Run for 32... Run for 64... Run for 72... # cores Wall time (s): ------------------------ 32 37.1065 64 25.3496 72 24.9712 |

|

|

|

|

|

|

|

|

#545 |

|

Senior Member

Join Date: Jun 2011

Posts: 208

Rep Power: 16  |

An Australian guy below did a comprehensive and well explained study + tests on the subject

https://apps.dtic.mil/sti/pdfs/ADA612337.pdf |

|

|

|

|

|

|

|

|

#546 |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,427

Rep Power: 49   |

An interesting read for sure. But some of the discussions fall a little short imo.

They observe a 67% performance increase going from RAM with 1333MT/s to 1600MT/s. Which is way more than could be explained by the increased transfer rate alone. This is never addressed. Reading this section, I almost get the feeling the report tries to obfuscate this data point. Which is really surprising. The premise was to optimize performance. And here we have 46% performance increase of unknown origin, which is never investigated properly. |

|

|

|

|

|

|

|

|

#547 |

|

Senior Member

Join Date: Jun 2011

Posts: 208

Rep Power: 16  |

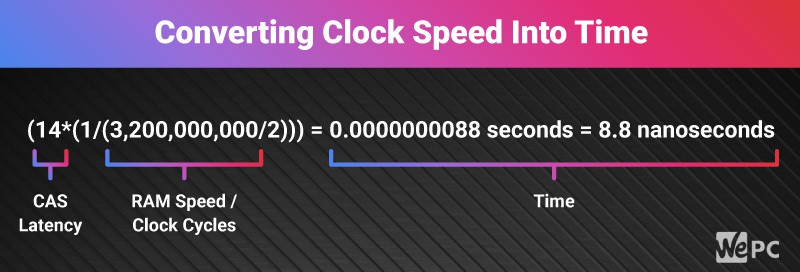

CAS latency also plays an important role in determinimg the exact RAM speed:

so that might explain the disproportionality. |

|

|

|

|

|

|

|

|

#548 |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,427

Rep Power: 49   |

There certainly is no shortage of possible explanations for their finding. Yet with the information given in the report, it seems impossible to come to a satisfactory conclusion.

I don't think memory latency can explain much of the difference. DDR3 Memory at JEDEC standards has pretty much constant access times across the board. Some of the diference could be LRDIMM vs RDIMM, but that brings us into guessing territory already. And that's not a 46% difference. |

|

|

|

|

|

|

|

|

#549 |

|

Senior Member

Will Kernkamp

Join Date: Jun 2014

Posts: 371

Rep Power: 14  |

The performance of the machine with 1333 MT/s memory is so bad that there must be some setup issue. Probably uniform memory instead of numa aware if I had to guess.

|

|

|

|

|

|

|

|

|

#550 | |

|

Member

|

I guess Memory bandwidth is still the major bottleneck here, just not the theoretical one, but the actual bandwidth that the system manages to squeeze.

At leat for zen? CPUs, L3 has an impact on the actual bandwidth (at least when L3 is not impractically large), as a results large L3 CPUs always have an edge, even with identical RAM setups. Architecture evolution could also be relevant, I remember that zen3 (also Apple's M1?) could exhaust the bandwidth with much less threads compared with zen2. Quote:

|

||

|

|

|

||

|

|

|

#551 | |

|

New Member

Guangyu Zhu

Join Date: May 2013

Posts: 12

Rep Power: 13  |

Quote:

I tried the case posted by Phew on my Dual EPYC 7T83 setup: Hardware:2x AMD Epyc 7T83 (64 cores each, base freq. 2.45GHz, boost 3.5GHz), Gigabyte MZ72-HB0, 16x16GB DDR4-3200 (RDIMM, 2Rx4)and 1TB SUMSUNG PM981A NVME. Software: The official precompiled OpenFOAM v2112 running on Centos 7.5. BIOS: The bios were tuned according to your post and AMD official working load tunning for HPC. Almost exactly the same as you mentioned in the thread in spite of NUMA set to NPS4. I deployed the benchmark case posted by Will Kernkamp, and only modified the core numbers. The testing went smooth, but the results are pretty weird: # cores Wall time (s): ------------------------ 16 56.99 32 34.48 64 24.52 126 22.04 It's tooo slow compare with your result on 2x7453, I'm investigating the possible bottleneck but stucked... RAM? The RAM consumption of this case is small, even I only have 2GB for each core, should be enough for this case. Seems the bandwidth shouldn't be the bottleneck in this case. Software? I used the pre-compiled OpenFOAM package instead compile it myself. Would it have such a huge impact on computation speed? Would you please sugget some there any other possible clues? Best Regards, Guangyu |

||

|

|

|

||

|

|

|

#552 |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,427

Rep Power: 49   |

Memory should be fine. This case can be run with 16GB, probably even less. The binaries being precompiled can have some effect, but it should not be this severe.

I have zero experience with administrating CentOS, outside of using it on clusters. Maybe the default kernel of 7.5 is too old? I also used the backport kernel for OpenSUSE. You can post the output of "lscpu" and "numactl --hardware" Try running your case again on 64 cores manually. Only the solver run, not the meshing process. Clear caches beforehand by executing "echo 3 > /proc/sys/vm/drop_caches" as root. Then start the solver run with the command mpirun -np 64 --report-bindings --bind-to core --rank-by core --map-by numa simpleFoam -parallel > log.simpleFoam 2>&1 You can also observe which cores are loaded with htop. And check CPU frequencies with turbostat. |

|

|

|

|

|

|

|

|

#553 |

|

Senior Member

Will Kernkamp

Join Date: Jun 2014

Posts: 371

Rep Power: 14  |

The performance is close to half the performance that flotus gets, independent of the number of cores. Seems like a memory config problem. With the recommendations of flotus, you will find if your numa access is OK. In addition, there might be a bad DIMM. That can really ruin your performance. I don't think the older version of linux should make much of a difference. My performance has been pretty stable over time as I upgrade linux.

|

|

|

|

|

|

|

|

|

#554 | |

|

New Member

Guangyu Zhu

Join Date: May 2013

Posts: 12

Rep Power: 13  |

Hi, wkernkamp:

Quote:

============================================ Intel(R) Memory Latency Checker - v3.9a Command line parameters: --max_bandwidth Using buffer size of 100.000MiB/thread for reads and an additional 100.000MiB/thread for writes Measuring Maximum Memory Bandwidths for the system Will take several minutes to complete as multiple injection rates will be tried to get the best bandwidth Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec) Using all the threads from each core if Hyper-threading is enabled Using traffic with the following read-write ratios ALL Reads : 359874.80 3:1 Reads-Writes : 320452.65 2:1 Reads-Writes : 312418.65 1:1 Reads-Writes : 306727.60 Stream-triad like: 325289.00 ============================================== The bandwidth between NUMA nodes are also fall in the normal range (unit of the attached fig is MiB/s)... EPYC 7T83 RAM READ BETWEEN NUMA NODES.jpg |

||

|

|

|

||

|

|

|

#555 | |

|

New Member

Guangyu Zhu

Join Date: May 2013

Posts: 12

Rep Power: 13  |

Quote:

Thanks for the suggestions. 1. The info from "lscpu" and "numactl --hardware" are listed below --------------------------------lscpu---------------------------- Architecture: x86_64 CPU op-mode(s): 32-bit, 64-bit Byte Order: Little Endian CPU(s): 128 On-line CPU(s) list: 0-127 Thread(s) per core: 1 Core(s) per socket: 64 Socket(s): 2 NUMA node(s): 16 Vendor ID: AuthenticAMD CPU family: 25 Model: 1 Model name: AMD EPYC 7T83 64-Core Processor Stepping: 1 CPU MHz: 1500.000 CPU max MHz: 2450.0000 CPU min MHz: 1500.0000 BogoMIPS: 4899.85 Virtualization: AMD-V L1d cache: 32K L1i cache: 32K L2 cache: 512K L3 cache: 32768K NUMA node0 CPU(s): 0-7 NUMA node1 CPU(s): 8-15 NUMA node2 CPU(s): 16-23 NUMA node3 CPU(s): 24-31 NUMA node4 CPU(s): 32-39 NUMA node5 CPU(s): 40-47 NUMA node6 CPU(s): 48-55 NUMA node7 CPU(s): 56-63 NUMA node8 CPU(s): 64-71 NUMA node9 CPU(s): 72-79 NUMA node10 CPU(s): 80-87 NUMA node11 CPU(s): 88-95 NUMA node12 CPU(s): 96-103 NUMA node13 CPU(s): 104-111 NUMA node14 CPU(s): 112-119 NUMA node15 CPU(s): 120-127 Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc art rep_good nopl nonstop_tsc extd_apicid aperfmperf eagerfpu pni pclmulqdq monitor ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_l2 cpb cat_l3 cdp_l3 invpcid_single hw_pstate sme retpoline_amd ssbd ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 invpcid cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold v_vmsave_vmload vgif umip pku ospke vaes vpclmulqdq overflow_recov succor smca ---------------------------------------------------------------------- -------------------------numactl --hardware----------------------- node 0 cpus: 0 1 2 3 4 5 6 7 node 0 size: 15824 MB node 0 free: 13502 MB node 1 cpus: 8 9 10 11 12 13 14 15 node 1 size: 16123 MB node 1 free: 14205 MB node 2 cpus: 16 17 18 19 20 21 22 23 node 2 size: 16125 MB node 2 free: 14553 MB node 3 cpus: 24 25 26 27 28 29 30 31 node 3 size: 16124 MB node 3 free: 14670 MB node 4 cpus: 32 33 34 35 36 37 38 39 node 4 size: 16125 MB node 4 free: 13966 MB node 5 cpus: 40 41 42 43 44 45 46 47 node 5 size: 16124 MB node 5 free: 14494 MB node 6 cpus: 48 49 50 51 52 53 54 55 node 6 size: 16125 MB node 6 free: 14987 MB node 7 cpus: 56 57 58 59 60 61 62 63 node 7 size: 16112 MB node 7 free: 14806 MB node 8 cpus: 64 65 66 67 68 69 70 71 node 8 size: 16125 MB node 8 free: 14596 MB node 9 cpus: 72 73 74 75 76 77 78 79 node 9 size: 16124 MB node 9 free: 13871 MB node 10 cpus: 80 81 82 83 84 85 86 87 node 10 size: 16125 MB node 10 free: 14409 MB node 11 cpus: 88 89 90 91 92 93 94 95 node 11 size: 16124 MB node 11 free: 13421 MB node 12 cpus: 96 97 98 99 100 101 102 103 node 12 size: 16109 MB node 12 free: 14917 MB node 13 cpus: 104 105 106 107 108 109 110 111 node 13 size: 16124 MB node 13 free: 14814 MB node 14 cpus: 112 113 114 115 116 117 118 119 node 14 size: 16125 MB node 14 free: 14130 MB node 15 cpus: 120 121 122 123 124 125 126 127 node 15 size: 16124 MB node 15 free: 14457 MB node distances: node 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 0: 10 11 12 12 12 12 12 12 32 32 32 32 32 32 32 32 1: 11 10 12 12 12 12 12 12 32 32 32 32 32 32 32 32 2: 12 12 10 11 12 12 12 12 32 32 32 32 32 32 32 32 3: 12 12 11 10 12 12 12 12 32 32 32 32 32 32 32 32 4: 12 12 12 12 10 11 12 12 32 32 32 32 32 32 32 32 5: 12 12 12 12 11 10 12 12 32 32 32 32 32 32 32 32 6: 12 12 12 12 12 12 10 11 32 32 32 32 32 32 32 32 7: 12 12 12 12 12 12 11 10 32 32 32 32 32 32 32 32 8: 32 32 32 32 32 32 32 32 10 11 12 12 12 12 12 12 9: 32 32 32 32 32 32 32 32 11 10 12 12 12 12 12 12 10: 32 32 32 32 32 32 32 32 12 12 10 11 12 12 12 12 11: 32 32 32 32 32 32 32 32 12 12 11 10 12 12 12 12 12: 32 32 32 32 32 32 32 32 12 12 12 12 10 11 12 12 13: 32 32 32 32 32 32 32 32 12 12 12 12 11 10 12 12 14: 32 32 32 32 32 32 32 32 12 12 12 12 12 12 10 11 15: 32 32 32 32 32 32 32 32 12 12 12 12 12 12 11 10 ---------------------------------------------------------------------- 2. The boost frequency under full loading is 3.0GHz, and jump to 3.2GHz under half loading (64 cores). Maximum boost frequncy reached 3.5GHz when using single core. The core usage in computation with 64 cores is attached, is this a balanced loading? core load.jpg 3. I have not run the case in "solver only" mode as suggested. But I found the openmpi version is quite old in the precompiled OF v2112 (v 1.10), could it be the reason of low compute speed? I'll try to compile the OF with the openmpi v4 and try again. |

||

|

|

|

||

|

|

|

#556 |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,427

Rep Power: 49   |

So you also have "ACPI SRAT L3 cache as NUMA domain" enabled. Otherwise there woudn't be 16 NUMA nodes.

Hardware looks fine, if there was a completely broken DIMM or slot we would see that in the output of numactl -hw. What's not looking good is thread binding, and that will be the single biggest contributor to your performance issues. If you run the solver with the command I posted earlier, you should get 4 threads per NUMA node. Right now the threads are not balanced across all numa nodes, which will slow things down. To be clear: you will not get the same level of performance I posted by running the script as provided. You have to take control of thread binding. |

|

|

|

|

|

|

|

|

#557 | |

|

New Member

Guangyu Zhu

Join Date: May 2013

Posts: 12

Rep Power: 13  |

Hi, Alex:

Quote:

binding cores.jpg # cores Wall time (s): ------------------------ 8 82.15 16 38.73 32 24.71 64 16.38 80 15.49 96 14.01 112 13.80 128 13.09 Below is the figure compare the 7T83's performance with and without core binding. Compare.jpg 7543 benefit a lot from its high frenquency, especially in low core situations. In current case, the speed up ratio growing slow after more than 64 cores, I will ran some tests to see the speed up ratio of 7T83 with larger models. Best Regards! |

||

|

|

|

||

|

|

|

#558 |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,427

Rep Power: 49   |

Looking good!

CPU core frequency between our CPUs should not differ vastly. For explaining the remainder of the diference, my money is on code optimization. I.e. me compiling the code on the machine it is run on, with the latest compilers, and machine-specific optimizations (march=znver3). The performance difference gets larger with lower core counts. That's the region where compiler optimizations can make a difference. For high core counts, the code becomes more memory bound, negating most of the optimizations the compiler can do. Also, for running on 8 cores with your current bios settings, you probably need a different command in order to use all available shared resources. I.e. one thread placed on every 16th core. OpenFOAM benchmarks on various hardware For a one-off, using an explicit cpu-list is probably the easiest solution. mpirun -np 8 --bind-to core --rank-by core --cpu-list 0,16,32,48,64,80,96,112 |

|

|

|

|

|

|

|

|

#559 | ||

|

Member

|

Quote:

Code:

mpirun -np $threads -parallel Quote:

) )And if core-binding alone could make that much a difference, I should seriously consider taking the extra effort every time runing a case.

|

|||

|

|

|

|||

|

|

|

#560 | |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,427

Rep Power: 49   |

I can't rerun the case since I no longer have access to this computer.

However... One of the goals for proper benchmarking is reducing variance. Controlling core binding is one necessary step along that route. Without it, results can be all over the place. It's just due to statistics that with this many threads and NUMA nodes, variance isn't actually that high, and the average drops significantly. Pulling average performance towards the maximum is a nice side-effect  Milan is not that much faster than Rome. We already have results from a Rome CPU that are closer to mine: OpenFOAM benchmarks on various hardware Quote:

|

||

|

|

|

||

|

|

|

Similar Threads

Similar Threads

|

||||

| Thread | Thread Starter | Forum | Replies | Last Post |

| How to contribute to the community of OpenFOAM users and to the OpenFOAM technology | wyldckat | OpenFOAM | 17 | November 10, 2017 16:54 |

| UNIGE February 13th-17th - 2107. OpenFOAM advaced training days | joegi.geo | OpenFOAM Announcements from Other Sources | 0 | October 1, 2016 20:20 |

| OpenFOAM Training Beijing 22-26 Aug 2016 | cfd.direct | OpenFOAM Announcements from Other Sources | 0 | May 3, 2016 05:57 |

| New OpenFOAM Forum Structure | jola | OpenFOAM | 2 | October 19, 2011 07:55 |

| Hardware for OpenFOAM LES | LijieNPIC | Hardware | 0 | November 8, 2010 10:54 |