|

|

|

[Sponsors] | |||||

They say the only constant in life is change and that’s as true for blogs as anything else. After almost a dozen years blogging here on WordPress.com as Another Fine Mesh, it’s time to move to a new home, the … Continue reading

The post Farewell, Another Fine Mesh. Hello, Cadence CFD Blog. first appeared on Another Fine Mesh.

Welcome to the 500th edition of This Week in CFD on the Another Fine Mesh blog. Over 12 years ago we decided to start blogging to connect with CFDers across teh interwebs. “Out-teach the competition” was the mantra. Almost immediately … Continue reading

The post This Week in CFD first appeared on Another Fine Mesh.

Automated design optimization is a key technology in the pursuit of more efficient engineering design. It supports the design engineer in finding better designs faster. A computerized approach that systematically searches the design space and provides feedback on many more … Continue reading

The post Create Better Designs Faster with Data Analysis for CFD – A Webinar on March 28th first appeared on Another Fine Mesh.

It’s nice to see a healthy set of events in the CFD news this week and I’d be remiss if I didn’t encourage you to register for CadenceCONNECT CFD on 19 April. And I don’t even mention the International Meshing … Continue reading

The post This Week in CFD first appeared on Another Fine Mesh.

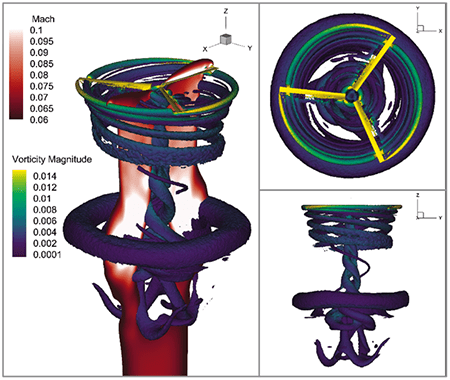

Some very cool applications of CFD (like the one shown here) dominate this week’s CFD news including asteroid impacts, fish, and a mesh of a mesh. For those of you with access, NAFEM’s article 100 Years of CFD is worth … Continue reading

The post This Week in CFD first appeared on Another Fine Mesh.

This week’s aggregation of CFD bookmarks from around the internet clearly exhibits the quote attributed to Mark Twain, “I didn’t have time to write a short letter, so I wrote a long one instead.” Which makes no sense in this … Continue reading

The post This Week in CFD first appeared on Another Fine Mesh.

I’m constantly fascinated by the intersections of art and fluid mechanics. In this video, we get an inside look at a French atelier making artist-grade pastels using centuries-old methods. And although the final product doesn’t appear to have much to do with fluids — compared to, say, paint — the process behind each pastel involves a lot of fluid mechanics: mixing, pressing, drying, and rolling. It’s a neat look at how a niche product gets made. (Video and image credit: Business Insider)

P.S. – Next week we’ll kick off our Paris Olympics coverage, but if you’d like a head start on the celebration, you can find our coverage of previous Olympics here. – Nicole

At times altocumulus cloud cover is pierced by circular or elongated holes, filled only with the wispiest of virga. These odd holes are known by many names: cavum, fallstreak holes, and hole punch clouds. Long-running debates about these clouds’ origins were put to rest some 14 years ago, after scientists showed they were triggered by airplanes passing through layers of supercooled droplets.

When supercooled, water droplets hang in the air without freezing, even though they are colder than the freezing point. This typically happens when the water is too pure to provide the specks of dust or biomass needed to form the nucleus of an ice crystal. But when an airplane passes through, the air accelerated over its wings gets even colder, dropping the temperature another 20 degrees Celsius. That is cold enough that, even without a nucleus, water drops will freeze. More and more ice crystals will form, until they grow heavy enough to fall, leaving behind a clear hole or wisps of falling precipitation.

In the satellite image above, flights moving in and out of Miami International Airport have left a variety of holes in the cloud cover each of them large enough to see from space! (Image credit: M. Garrison; research credit: A. Heymsfield et al. 2010 and A. Heymsfield et al. 2011; via NASA Earth Observatory)

The low sun angle in this astronaut photo of Junggar Basin shows off the wind- and water-carved landscape. Located in northwestern China, this region is covered in dune fields, appearing along the top and bottom of the image. The uplifted area in the top half of the image is separated by sedimentary layers that lie above the reddish stripe in the center of the photo. Look closely in this middle area, and you’ll find the meandering banks of an ephemeral stream. Then the landscape transitions back into sandy wind-shaped dunes. (Image credit: NASA; via NASA Earth Observatory)

When Kilauea‘s caldera collapsed in 2018, it came with a sequence of 12 closely-timed eruptions that did not match either of the typical volcanic eruption types. Usually, eruptions are either magmatic — caused by rising magma — or phreatic — caused by groundwater flash-boiling into steam. The data from Kilauea matched neither type.

Instead, scientists proposed a new model for eruption, based around a mechanism similar to the stomp-rockets that kids use. They suggested that, before the eruption, Kilauea’s magma reservoir contained a mixture of magma and a pocket of gas. When part of the magma reservoir collapsed, the falling rock compressed the gases in the chamber — much the way a child’s foot compresses the air reservoir of a stomp rocket — building up enough gas pressure to explosively launch debris and hot gas up to the surface.

The team found that computer simulations of this new eruption model matched well with observations and measurements taken at Kilauea in 2018. Kilauea is one of the most closely monitored volcanoes in the world; although the team suspects this mechanism occurs during caldera collapse of other volcanoes, it’s unlikely they could have pieced together such a convincing case for an eruption anywhere else. (Image credit: O. Holm; research credit: J. Crozier et al.; via Physics World)

From Earth, we rarely glimpse the violent flows of our home star. Here, a filament erupts from the photosphere creating a coronal mass ejection, captured in ultraviolet wavelengths by the Solar Dynamics Observatory. This particular eruption took place in 2012, and, while it was not aimed at the Earth, it did create auroras here a few days later. Eruptions like these occur as complex interactions between the sun’s hot, ionized plasma and its magnetic fields. Magnetohydrodynamics like these are particularly tough to understand because they combine magnetic physics, chemistry, and flow. (Image credit: NASA/GSFC/SDO; via APOD)

Like schools of fish, starlings gather in massive undulating crowds. Known as murmurations, these gatherings are a type of collective motion. Scientists often try to mimic these groups through simulations and lab experiments where individuals in a swarm obey simple rules that depend only on observing their neighbors. It requires very little, it turns out, to form swarms that move in this beautiful manner! (Video and image credit: J. van IJken; via Colossal)

|

Hi sakro,

Sadly my experience in this subject is very limited, but here are a few threads that might guide you in the right direction:

Best regards and good luck! Bruno |

<-- this dot is just a general sign for multiplication; both multiplication of scalars and scalar multiplication of vectors can be denoted by it; obviously, if I multiply vectors, I will denote them as vectors (i.e. with an arrow above), everything that doesn't have an arrow above is a scalar

<-- this dot is just a general sign for multiplication; both multiplication of scalars and scalar multiplication of vectors can be denoted by it; obviously, if I multiply vectors, I will denote them as vectors (i.e. with an arrow above), everything that doesn't have an arrow above is a scalar and

and  are tangent and cotangent respectively

are tangent and cotangent respectively is logarithm with the base of 10

is logarithm with the base of 10 is natural logarithm

is natural logarithm ,

,  and

and  are all the same thing

are all the same thing

is called diffusion velocity, see, e.g., general.H line 92

is called diffusion velocity, see, e.g., general.H line 92 is called drift velocity, see, e.g., general.H line 63

is called drift velocity, see, e.g., general.H line 63 is declared in the createFields.H file (see line 57), which is a part of interPhaseChangeFoam, and not the part of driftFluxFoam.

is declared in the createFields.H file (see line 57), which is a part of interPhaseChangeFoam, and not the part of driftFluxFoam.dnf install -y python3-pip m4 flex bison git git-core mercurial cmake cmake-gui openmpi openmpi-devel metis metis-devel metis64 metis64-devel llvm llvm-devel zlib zlib-devel ....

{

echo 'export PATH=/usr/local/cuda/bin:$PATH'

echo 'module load mpi/openmpi-x86_64'

}>> ~/.bashrc

cd ~ mkdir foam && cd foam git clone https://git.code.sf.net/p/foam-extend/foam-extend-4.1 foam-extend-4.1

{

echo '#source ~/foam/foam-extend-4.1/etc/bashrc'

echo "alias fe41='source ~/foam/foam-extend-4.1/etc/bashrc' "

}>> ~/.bashrc

pip install --user PyFoam

cd ~/foam/foam-extend-4.1/etc/ cp prefs.sh-EXAMPLE prefs.sh

# Specify system openmpi # ~~~~~~~~~~~~~~~~~~~~~~ export WM_MPLIB=SYSTEMOPENMPI # System installed CMake export CMAKE_SYSTEM=1 export CMAKE_DIR=/usr/bin/cmake # System installed Python export PYTHON_SYSTEM=1 export PYTHON_DIR=/usr/bin/python # System installed PyFoam export PYFOAM_SYSTEM=1 # System installed ParaView export PARAVIEW_SYSTEM=1 export PARAVIEW_DIR=/usr/bin/paraview # System installed bison export BISON_SYSTEM=1 export BISON_DIR=/usr/bin/bison # System installed flex. FLEX_DIR should point to the directory where # $FLEX_DIR/bin/flex is located export FLEX_SYSTEM=1 export FLEX_DIR=/usr/bin/flex #export FLEX_DIR=/usr # System installed m4 export M4_SYSTEM=1 export M4_DIR=/usr/bin/m4

foam Allwmake.firstInstall -j

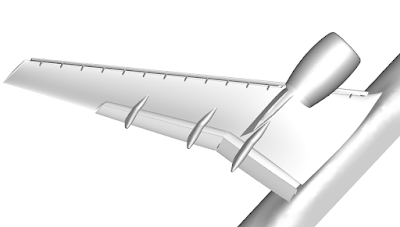

Figure 1: GridPro Version 9 Feature Image.

1532 words / 7 minutes read

In the ever-evolving landscape of grid generation, the goal of having an autonomous and reliable CFD simulation is the driving force behind progress. We’re thrilled to announce the release of GridPro Version 9, a major update that brings many new features, improvements, and powerful tools to empower users to achieve this vision. This release marks a significant milestone in our commitment to automate structured meshing. We have two new verticals released along with GridPro Version 9:

This article presents a few highlights. To learn more about other features packed in Version 9, Check out the release notes and What’s New.

To align ourselves with CFD_Vision_2030_Roadmap, we now use ESP as our modelling environment. With the introduction of this new environment, we aim to provide an adequate linkage between GridPro and the upfront CAD system. We have implemented a host of CAD creation tools, which enables users to create basic geometries in GridPro using the CAD panel.

As the first linking step in any Upfront CAD package, GridPro can import the labelling and grouping from any CAD package upstream through our improved STEP file format. The labels created in CAD software can be edited or inherited as surface and boundary labels in the mesh exported from GridPro, creating a seamless integration with the solver downstream.

In GridPro Version 9, labelling/grouping can also be used to split the underlying surface mesh. In the previous version, surfaces were split to improve the volume mesh quality based on the feature angles, but now users can also split surfaces by utilizing surface labels/groups. This saves time and reduces the manual effort required to select multiple surfaces for splitting purposes.

One of the significant challenges with traditional structured meshing is to dynamically update 3D blocking in the design and analysis of many engineering applications. Especially in scenarios involving shape optimization, moving boundary problems, etc. This requires the user to regenerate the blocks for every design change. It is a tedious and very time-consuming task to recreate a block structure after every design iteration.

GridPro, being a topology-based mesher, could readily accommodate geometry variations without any additional changes to the blocking. However, when geometries have non-uniform scaling, the parts of the block topology have to be moved close to the geometry to be mapped. This could become a time-consuming process, but with the introduction of the Block Mapping tool, the mapping can be done with a few clicks.

GridPro’s flexible topology paradigm enables users to create blocks without any restrictions. This sometimes results in the user creating poorly shaped blocks. Though the mesh generation engine smooths the poorly shaped blocks, it increases the mesh convergence time. With the new topology, smoother, irregularly placed blocking is now repositioned to provide a better intuition and speed up the meshing time. With this new feature, the time for grid generation is significantly reduced by an order of magnitude in many cases.

To improve hypersonic simulation workflows, GridPro introduces a Shock Alignment feature. This innovation adapts the grid blocks to the shock formed in a baseline solution. By splitting blocks in the shock region and aligning the grid normal to the shock surface, the algorithm enhances simulation accuracy and accelerates convergence. This advancement allows users to achieve faster and more precise results, optimizing their computational fluid dynamics analyses. With GridPro’s Shock Alignment, engineers and researchers can tackle complex hypersonic flows more efficiently and reliably. (Check out the paper published in the AIAA Hypersonics Conference: A Shock Fitting Technique For Hypersonic Flows Using Hexahedral Meshes.)

In GridPro, we have combined the benefits of unstructured meshing like local refinement, mesh adaptation, and multi-scale meshing by adapting the multi-block structure. This is done with a feature called Nesting, In this version we have released another flavour of nesting called the clamped nest. Clamped nesting aggressively refines the mesh near the geometry while coarsening it outside the region. This technique is particularly effective in creating highly refined regions, especially for LES and DNS simulations.

To speed up the block creation time for repeated geometries, an Array-block replication option is introduced. This provides the capability to replicate a topology in multiple directions. This tool is particularly advantageous when dealing with similar geometric patterns or shapes. Instead of creating the topology individually for each pattern, users can generate one pattern and seamlessly replicate it across self-similar geometric patterns in three different directions. Utilizing the Array feature, users can create blocking for a single periodic section and extend it in the X, Y, and Z directions.

Starting now, the UI enables the creation of higher-order meshes with ease. Users can choose their preferred higher-order format – quadratic, cubic, or quartic – and the tool will automatically adjust the density to the nearest multiple of the selected order for seamless mesh generation.

Users can also import internally or externally generated higher-order grids into the UI for visualization and quality assessment. The meshes can be observed in various modes, including Only Edges, Edges with Corners, Edges with Nodes, and Edges with All Nodes, allowing for a comprehensive examination.

Moreover, users can evaluate mesh quality parameters such as the Jacobian of the higher-order elements and compare them to the native linear mesh for detailed analysis.

The local block smoothing feature introduced in GridPro Version 9 provides the user a way to eliminate negative volumes ( folds) in the generated mesh locally. The local smoothing is a post-processing step which can be done in the grid to either improve the grid locally or to eliminate negative volumes.

The smoothing feature offers two schemes: Transfinite Interpolation (TFI) and Partial Differential Equation (PDE) smoothing. TFI-based smoothing is computationally less intensive, while PDE-based smoothing, despite its higher computational cost, proves more effective in areas with high curvature, producing meshes with fewer folds.

In version 9, we provide GUI options to harness the control features in the Grid Schedule function. These are designed to accelerate mesh smoothing by leveraging the multi-grid capabilities of structured meshes. Particularly beneficial for large topologies, the approach involves initially running the topology at a lower density. Once the corners are approximately smoothed and positioned, the smoother can be executed for higher densities, contributing to accelerated grid convergence.

Users can insert additional steps to further customize the smoothing process, effectively breaking down the process into multiple stages. After each step, the smoothing computations automatically resume from the previous state, ensuring a seamless and efficient progression.

Now, users can generate multiple Cut Planes, allowing them to create sectional views at various locations and directions. The Cut Plane, employed to clip a portion of a surface or grid, facilitates the examination of its interior, mainly when the area to be meshed is situated inside the surfaces. The enhanced feature of utilizing more than one Cut Plane significantly simplifies the assessment of topology and mesh in complex areas.

In version 9, we introduced the CAD and Meshing API with Python 3 support, empowering users to automate the meshing workflow with greater control. The updated API provides a comprehensive set of commands, including preprocessing and postprocessing operations. Repetitive tasks and batch operations can also be automated. This significantly reduces the user’s time spent on meshing and enhances productivity, particularly for new designs that follow similar workflows.

The new APIs can be tightly integrated with any CAD or optimization system, making them an excellent tool for automating topologically similar geometries. By leveraging the API, users can streamline their design process, ensuring efficient and consistent high-quality meshes and CFD results across different designs.

Upgrading to GridPro Version 9 ! Existing users can easily upgrade to the latest version, while new users can explore the enhanced capabilities by downloading the software from https://www.gridpro.com.

Visit our official website gridpro.com to download the latest version of GridPro!

To see these features in action, visit our Youtube Channel: GridPro Version 9 New Features Playlist.

As we continue to evolve and innovate, GridPro Version 9 reflects our commitment to providing you with the best tools and features to ease the workflow of mesh generation and accuracy of your CFD simulations. We believe these new features and tools will increase reliability and change how you mesh.

By subscribing, you'll receive every new post in your inbox. Awesome!

The post GridPro Version 9 Release Highlights! appeared first on GridPro Blog.

Figure 1: Turbine blade with winglet tips. Image source – Ref [1].

702 words / 3 minutes read

Winglet Tips are effective design modifications to minimize tip leakage flow and thermal loads in turbine blades. A reduction in leakage losses of up to 35-45 % has been reported.

The design and optimization of gas turbines is a crucial aspect of the energy industry. One aspect that has gained significant attention in recent years is the issue of tip leakage flow in gas turbines. Tip clearances, which are provided between the turbine blade tip and the stationary casing, allow free rotation of the blade and also accommodate mechanical and thermal expansions.

However, this narrow space becomes instrumental in the leakage of hot gases when the pressure difference between the pressure side and the suction side of the flow builds up. This is undesirable as it reduces the turbine efficiency and work output. According to some studies, tip leakage loss could account for one-third of the total aerodynamic loss in turbine rotors. Further, leakage flows bring in extra heat, which raises the blade tip metal temperature, thereby increasing the tip thermal load.

It is, therefore, essential to cool the blade tip and seal the leakage flow. Over the years, various design features have been proposed as a solution. One of the promising features employed in tip design is the use of winglets.

Winglet tips comprise of a blade tip with a central cavity and an outward extension of the cavity rim called the winglet. Different variants are developed based on the outward extent of the winglet, the length of the winglet and the location of the winglet. Figure 3 shows three winglet variants derived from the base geometry of the tip with a cavity. The first two have winglets on the suction side with different lengths, while the third one has a small winglet on the suction side as well as on the pressure side.

The flow pattern within the cavity of the winglet-cavity tip is similar to that in the cavity tip. On the blade pressure surface, the flow accelerates toward the trailing edge. On the blade suction surface, the flow accelerates till 60 percent of the tip chord and then decelerates toward the trailing edge. Near the leading edge of the blade tip, the flow enters the tip gap and impinges on the cavity floor of the tip, enhancing the local heat transfer. Then, a vortex forms along the suction side squealer. The vortex within the cavity is called a “cavity vortex.” It is also observed that the flow separates at the pressure-side tip edge, and most of the fluid exits the tip gap straight after entering the tip gap from the pressure-side inlet. Nevertheless, some fluid entering the tip gap mixes with the cavity vortex first and then exits the tip gap. The tip leakage flow exiting the gap rolls up to form a tip leakage vortex.

While generating meshes for leakage flow simulations, having a fine mesh in the leakage gap is critical. The narrow gap should be finely resolved with at least 40-50 layers of cells. In the tangential direction across the tip gap, 30 – 40 layers of cells are required to capture the winglet width and 150-160 cell layers to capture the tip gap from the suction side to the pressure side. Such a fine-resolution structured mesh will lead to a total cell count of about 7 to 9 million.

The boundary layer should be fully resolved with an estimated Y+ less than 1, using a slow cell growth rate of 1.1 to 1.2. Grid refinement studies with grids varying from 6 to 10 million have shown to decrease the tip average heat transfer coefficient by about 1.8 to 1.9% with every 2 million increase in cell count.

The average tip heat transfer coefficient (HTC) and total tip head load increase with an increase in tip gap. HTC is observed to be high on the pressure side winglet due to flow separation reattachment and also high on the side surface of the suction side winglet due to impingement of the tip leakage vortex.

Tip winglets are found to decrease tip leakage losses. Because of the long distance between the two squealer rims, the flow mixing inside the cavity is enhanced, and the size of the separation bubble at the top of the suction side squealer is increased, effectively reducing leakage loss. In a low-speed turbine, the winglet cavity tip is observed to reduce loss by 35-45% compared to a flat tip. When it comes to thermal performance, the tip gap size becomes a major influencing factor.

1. “Heat Transfer of Winglet Tips in a Transonic Turbine Cascade”, Fangpan Zhong et al., Article in Journal of Engineering for Gas Turbines and Power · September 2016.

2. “Tip gap size effects on thermal performance of cavity-winglet tips in transonic turbine cascade with endwall motion”, Fangpan Zhong et al., J. Glob. Power Propuls. Soc. | 2017, 1: 41–54.

3. “Turbine Blade Tip External Cooling Technologies”, Song Xue et al., Aerospace 2018, 5, 90.

4. “Aero-Thermal Performance of Transonic High-Pressure Turbine Blade Tips“, Devin Owen O’Dowd, St John’s College, PhD Thesis, Department of Engineering Science, University of Oxford, 2010.

By subscribing, you'll receive every new post in your inbox. Awesome!

The post Cooling the Hot Turbine Blade with Winglet Tips appeared first on GridPro Blog.

Figure 1: Turbine blade with squealer tips.

400 words / 2 minutes read

Revolutionary turbine blade tip designs are critical for minimizing tip leakage losses in gas turbines. Several innovative designs, such as flat tips, squealer tips, tips with winglets and honeycomb cavities, have shown the potential to mitigate this problem.

Turbine efficiency and performance can be improved by minimizing tip leakage losses in the blades. These losses are an unavoidable result of the flow passing through the narrow gap between the blade tip and shroud, mixing with the main flow and causing disruption in the flow pattern. The proportion of tip leakage losses to total aerodynamic loss can be as high as one-third, a significant amount that can reduce turbine output.

By reducing tip leakage losses, a turbine can operate more efficiently, resulting in lower fuel consumption, fewer emissions, and ultimately lower operating costs. In addition, it can prolong the lifespan of the turbine by reducing wear and tear on the blades and other components. Therefore, minimizing tip leakage losses is essential for optimizing the turbine’s performance and ensuring its long-term sustainability.

Gas turbines use tip clearances between the turbine blade tip and the stationary casing to prevent rubbing and accommodate expansions. Unfortunately, these clearances create aerodynamic losses and leakage, which reduce turbine efficiency and work output. Leakage through the clearance also adds extra heat, increasing the tip metal temperature and thermal load. In high-performance turbines, the tip leakage flow is intense, significantly impacting turbine performance. As a result, developing new or enhanced designs that cool the blade tip and seal the leakage flow is crucial. Proper tip clearance control is vital to optimizing gas turbine performance and output.

Over the years, various tip design features have been proposed as a solution, like flat tips, squealer tips, tips with winglets and honeycomb cavities, etc. Unfortunately, less clarity exists in understanding the dominant flow structure, affecting these tip designs’ aerodynamic benefits. Hence, numerical and experimental studies are conducted to study the effects of different tip designs on the aerodynamic performance and cooling requirements.

To learn more about innovative tip designs and their impact on turbine performance, consider reading these three articles:

By subscribing, you'll receive every new post in your inbox. Awesome!

The post Innovative Turbine Blade Tips to Reduce Tip Leakage Flow appeared first on GridPro Blog.

Figure 1: Structured mesh for honeycomb cells using GridPro.

475 words / 2 minutes read

Honeycomb tips offer a promising solution for mitigating tip leakage flows in turbines. These novel tip designs have shown to be highly effective in reducing tip leakage mass flow rate and aerodynamic losses, outperforming conventional flat tips.

The design of turbine blades, particularly the tips, plays a significant role in determining the efficiency and performance of turbines. Although an unavoidable issue, tip leakage flow can lead to reduced tip loads and increased aerodynamic losses through flow separation and mixing. With the increasing loads handled by high-performance turbines, the intensity of tip leakage flow becomes even more pronounced. To address this, new designs or improvements to existing designs are necessary. One promising solution is using honeycomb tips, which have proven to be effective in reducing the negative effects of tip leakage flow. This article will delve into the advantages of honeycomb tips and how they can improve the performance of high-performance turbines.

The concept of honeycomb tips is a relatively new and innovative approach to blade tip design. This design features multiple hexagonal cavities on the blade tip, usually numbering 60-70 and with a depth of a few millimeters.

Research has revealed that honeycomb tips have favourable characteristics for inhibiting tip leakage flow. Part of the leakage flow enters the hexagonal cavities and creates small vortices. These vortices mix with the upper leakage fluid, which leads to an increase in resistance in the clearance space, suppressing the tip leakage flow and reducing the size and intensity of the leakage vortex. Additionally, the use of honeycomb tips allows for a tighter build clearance compared to a flat tip, as contact is less damaging.

There are various modifications to the standard design of honeycomb tips. One such variation involves injecting coolant from the bottom of each hexagonal cavity to enhance heat transfer at the blade tip. Simulations have demonstrated that this design results in a reduction of up to 26.7% in the tip leakage mass flow rate and a decrease of approximately 4.6% in the total pressure loss coefficient compared to a flat tip.

Another variation of honeycomb tips is the composite honeycomb tip, which features an additional lower frustum in addition to the upper hexagonal prism. Studies have indicated that this design leads to a reduction of up to 16.81% in the tip leakage mass flow rate and a decrease of 5.49% in losses.

Thus, both variations of honeycomb tips show great promise in significantly enhancing the performance of turbine blades.

To effectively capture the effects of these tiny honeycomb cavities, finely resolved mesh is essential. The boundary layer needs to be fully resolved with Y+ around 1. With 60-70 cavities, the total cell count could reach 5-6 million, with each cavity requiring about 0.0075 million cells for proper discretization. Such a fine mesh is necessary to precisely capture the tip vortex and the small cavity vortices, as well as the interactions between them.

Honeycomb tips offer a promising solution for mitigating tip leakage flows in turbines. These novel tip designs have proven to be highly effective in reducing the tip leakage mass flow rate and aerodynamic losses, outperforming conventional flat tips. The cavity vortices created within the honeycomb design effectively control the leakage flow, making it a viable option for various clearance gaps. Overall, honeycomb tips have the potential to significantly improve the performance of turbines.

1. “Effect of clearance height on tip leakage flow reduced by a honeycomb tip in a turbine cascade”, Fu Chen et al., Proc IMechE Part G: J Aerospace Engineering 0(0) 1–13.

2. “Effect of cooling injection on the leakage flow of a turbine cascade with honeycomb tip”, Yabo Wang et al., Applied Thermal Engineering 133 (2018) 690–703.

3. “Parameter optimization of the composite honeycomb tip in a turbine cascade”, Yabo Wang et al., Energy, 22 February 2020.

By subscribing, you'll receive every new post in your inbox. Awesome!

The post Honeycomb Tips to Reduce Turbine blade Leakage Flows appeared first on GridPro Blog.

Figure 1: Turbine blade with squealer tips.

1005 words / 5 minutes read

Squealer tips solve various challenges in the turbine blade tip gap region, such as reducing tip leakage flow and protecting blade tips from high-temperature gases. Further, they also improve design clearance through their sealing properties.

To improve turbine aerodynamic performance, reducing tip leakage loss is important. However, due to the existing radial gap between the rotating blades and the stationary casing, tip leakage flow is inevitable. When the leakage flow mixes with the main flow, leakage losses occur, which can be as large as one-third of the total blade passage loss. This huge loss highlights the importance of controlling tip leakage loss for the efficient operation of turbines.

Efforts to minimize tip leakage losses are carried out by improving blade tip designs. Turbine blades come in two types – shrouded and unshrouded. It is in unshrouded blades where this tip leakage loss is prominent. So, many tip shape designs like flat tips, squealer tips, tips with winglets and honeycomb cavities, etc., are developed to reduce leakage loss. Out of these, squealer tips have gained the most attention because of their excellent aerodynamic performance.

Unfortunately, less clarity exists in understanding the dominant flow structure, which affects the aerodynamic benefits of the squealer tip. Hence, numerical and experimental studies are conducted to study the effects of different tip designs on the aerodynamic performance and cooling requirements.

Squealers comprise a boundary rim encircling the blade tip periphery and a central cavity. While doing parametric geometry optimization, the rim thickness and the cavity depth are varied to generate different variants, as shown in Figure 2a.

Squealer variants are also generated by varying the rim length along the blade tip periphery. Partial rims on the suction side are called suction-side squealers, and those on the pressure side are called pressure-side squealers, while squealers with rim running all along the tip periphery are called double-side squealers or simply squealers. Figure 2b shows this class of squealers.

Each variant uniquely modifies the flow to bring in favourable performance benefits. Moving from left to right in Figure 2a, the blade heat-flux increases with an increase in efficiency. Design A, with the rim removed at both the leading edge and trailing edge of the blade, provides the lowest heat flux. Design B, with increased suction side rim length, provides increased efficiency, while Design C, which is nothing but Design B with winglets or overhang, provides the highest efficiency.

The opening at the LE and TE bring in beneficial effects. The opening at the TE increases the strength of the cavity vortex and improves its sealing effectiveness. On the other hand, the opening at the LE allows some flow to enter the cavity, reducing the angle mismatch between the leakage and the main flow. Overall, the combination of LE and TE openings has a positive effect on heat transfer.

The squealer tip cavity is home to many vortices, such as the cavity vortex, scraping vortex and the corner vortex. When these vortices’ characteristics change, they alter the mixing of leakage flow inside the cavity and the separation bubble at the top of the suction side squealer. This modifies the controlling effect of the squealer tip on the leakage flow.

Out of the many vortices, the scraping vortex is the most dominant flow structure, which plays a critical role in leakage loss reduction. It forms an aero-labyrinth-like sealing effect inside the cavity. Through this effect, the scraping vortex increases the energy dissipation of leakage flow inside the gap and reduces the equivalent flow area at the gap outlet. The discharge coefficient of the squealer tip is therefore decreased, and the tip leakage loss is reduced accordingly.

So when we vary the blade tip load distribution and also the squealer geometry, the scraping vortex characteristics, such as the size, intensity and position inside the cavity, change, resulting in a different controlling effect on leakage loss.

Geometrically, the squealer height also matters. It has an optimum value, and any deviation from it will cause a reduction in the size of the scraping vortex, leading to lesser effectiveness in controlling the leakage loss.

Usually, hexahedral meshes are preferred for turbine blade simulations because of their low dissipation quality. An H-type topology is used for the main regions of the computational domain, while an O-type topology is used in the near vicinity of the blade wall and inside the tip gap. When doing simulations for multiple squealer tip variants, it is better to use the same topology and do minimal alteration only if needed to avoid numerical discrepancy.

The boundary layer needs to be fully resolved with Y+ less than one. Since the tip gap is the source for vortex generation, recirculation, flow separation and reattachment, it is quintessential to have a high resolution of the tip gap. Also, the point placement on the blade near the tip in the spanwise direction is made finer.

Before undertaking an optimisation study to find the best-performing squealer variant, it is better to conduct a grid independence study to eliminate the grid effect on the solution and also to converge on the optimal mesh number. Firstly, since the tip gap is of utmost importance, the radial mesh in the gap can be varied from 10 to 50 and analysed.

Figure 5a shows one such analysis where the difference in leakage flow rates is observed to be becoming smaller with an increase in mesh number. When the mesh number exceeds that of Grid 4, the change in leakage flow rate is less than 0.3%.

Figure 5b shows the flow field in the middle of the gap. As can be observed, the flow field in the gap doesn’t change beyond level Grid 4 refinement, with about 37 layers in the gap.

Figure 6 shows another plot of solution variation with grid refinement at the rotor outlet. With the increase in gap mesh numbers, the numerical discrepancies caused by different mesh numbers are gradually reduced. Carrying out such similar grid-independent analyses at other positions and directions will help us to converge on to a mesh with optimal refinement at all critical locations.

Squealer tip serves as an effective tool in reducing tip leakage flow. They also protect the blade tips from the full impact of the high-temperature leakage gases. Lastly, they act as a seal and help in achieving a tighter design clearance in the tip gap region.

By making a reasonable choice in geometric parameters and proper blade loading distribution, the scraping vortex in squealer tips can be better regulated to reduce tip leakage flow.

1. “Dominant flow structure in the squealer tip gap and its impact on turbine aerodynamic performance”, Zhengping Zou et al., Energy 138 (2017) 167-184.

2. “Numerical investigations of different tip designs for shroudless turbine blades”, Stefano Caloni et al., Proc IMechE Part A: J Power and Energy 2016, Vol. 230(7) 709–720.

3. “Aerodynamic Character of Partial Squealer Tip Arrangements In An Axial Flow Turbine”, Levent Kavurmacioglu et al., 200x Inderscience Enterprises Ltd.

4. “Aerothermal and aerodynamic performance of turbine blade squealer tip under the influence of guide vane passing wake”, Bo Zhang et al., Proc IMechE Part A: J Power and Energy 0(0) 1–20.

By subscribing, you'll receive every new post in your inbox. Awesome!

The post Effective Control of Turbine Blade Tip Vortices Using Squealer Tips appeared first on GridPro Blog.

Figure 1: Hexahedral mesh for HiFire6 vehicle with Busemann hypersonic intake.

1200 words / 6 minutes read

Hypersonic flow phenomena, such as shock waves, shock-boundary layer interactions, and laminar to turbulent transitions, necessitate flow-aligned, high-resolution hexahedral meshes. These meshes effectively discretize the flow physics regions, enabling accurate prediction of their impact on the flow.

In light of successful scramjet-powered hypersonic flight tests conducted by numerous countries, the pressure is mounting for other nations to keep up with this technology. Extensive testing and computational fluid dynamics (CFD) simulations are underway to develop a scramjet design capable of withstanding the demanding conditions of hypersonic flight.

As an effective and efficient design tool, CFD plays a pivotal role in rapidly designing and optimizing various parametric scramjet configurations. However, simulating these extreme flow fields using CFD is a formidable challenge, and proper meshing is of utmost importance.

The meshing requirements for CFD of hypersonic flows in intakes differ significantly from those for low Mach number flows. High-speed flows involve elevated temperatures and interactions between shockwaves and boundary layers, which were previously negligible. Boundary layers are particularly critical as they experience high rates of heat transfer. Furthermore, the transition of the boundary layer from laminar to turbulent flow is a complex phenomenon that is challenging to capture and simulate accurately. Nonetheless, this transition is of paramount importance, as it has a profound impact on flow behaviour.

Change in the flow field demands a change in meshing requirements. As one may expect, the boundary layer should have a high resolution to capture the velocity boundary layer and the enthalpy boundary layer. Next, the shocks must also be captured precisely since the flow turns through the shock wave in hypersonic flows. But more importantly, shocks have extremely strong gradients, which can lead to large errors if not resolved accurately.

Multiple shocks and boundary layer interactions happen in hypersonic intake flows at different locations. If these effects are not resolved precisely, it is impossible to predict whether the hypersonic engine works effectively or not. To summarise, we must deal with multiple effects with different strength levels. The gridding system we adopt should create a grid that adequately resolves all effects with sufficient precision to achieve the needed level of solution reliability.

Other regions of concern in scramjet are the inlet leading edge, injector and cavity. Not only does the mesh topology have to be appropriately structured around these regions, but it must also align with the surfaces as best as possible to avoid introducing unnecessary skewing and warpage.

The boundary layer, a home for laminar to turbulent transitions and shock-induced boundary layer separation, must be properly resolved. Usually, structured meshes are preferred. Even the hybrid unstructured approach adopts finely resolved stacked prism or hexahedral cells in viscous padding.

This is necessary because resolving the boundary layer close to the wall aids in accurately representing its profile, leading to correct predictions of wall shear stress, surface pressure and the effect of adverse pressure gradients and forces.

Further, at hypersonic speeds, the transition of laminar to turbulent boundary layer inside the boundary layer significantly influences aircraft aerodynamic characteristics. It affects the thermal processes, the drag coefficient and the vehicle lift-to-drag ratio. Hence, paying attention to how well the cells are arranged in the boundary layer padding is critically essential.

Another important aspect of the proper resolution of the boundary layer is how it helps predict shock-induced flow separation. Shock wave interaction with a turbulent boundary layer generates significant undesirable changes in local flow properties, such as increased drag rise, large-scale flow separation, adverse aerodynamic loading and heating, shock unsteadiness and poor engine inlet performance.

Unsteadiness induces substantial variations in pressure and shear stress, leading to flutter that impacts the integrity of aircraft components. Additionally, the operational efficiency of engines can be considerably compromised if the shock-wave-induced boundary layers separation deviates from the anticipated location. If the computational grid fails to accurately represent the interaction between shock waves and boundary layers due to inadequate resolution or improper cell placement, the obtained results from CFD will lack practical utility or advantages. This underscores the critical significance of well-designed grids in the context of hypersonic flows.

Ideally, grid lines need to be aligned to the shock shape. For this, hexahedral meshes are better suited. They can be tailored to the shock pattern and made finer in the direction normal to the shock or adaptively refined. This brings the captured shock thickness closer to its physical value and improves the solution quality by aligning the faces of the control volumes with the shock front. Shock-aligned grids reduce the numerical errors induced by the captured shock waves, thereby significantly enhancing the computed solution quality in the entire region downstream of the shock.

This grid alignment is necessary for both oblique and normal bow shock. Grid studies have shown that solver convergence is extremely sensitive to the shape of the O-grid at the stagnation point. Matching the edge of the O-grid with the curved standing shock and maintaining cell orthogonality at the walls was necessary to get good convergence.

Also, grid misalignment is observed to generate non-physical waves, as shown in Figure 7. For CFD solvers with low numerical dissipation, a strong shock generates spurious waves when it goes through a ‘cell step’ or moves from one cell to another. Such numerical artefacts can be avoided, or at least the strength of the spurious waves can be minimized by reducing the cell growth ratio and cell misalignment w.r.t the shock shape.

A sparser grid density may suffice in areas where flow is uniform and surfaces have slight curvatures. Nevertheless, it becomes necessary to employ grid clustering and increase the resolution in regions characterized by abrupt flow gradients, geometric or topological variations, regions accommodating critical flow phenomena (such as near walls, shear and boundary layers, shock interactions), geometric cavities, injectors, and other solid structures. The appropriate refinement of these regions holds significance as it contributes to enhancing the efficacy of numerical schemes and models at both local and global levels. Consequently, this refinement leads to the generation of more precise and reliable results.

When employing a solution-based grid adaptation approach, the selection of an appropriate refinement ratio and initial grid density becomes crucial. If the refinement ratio is too low, it may be inefficient and ineffective. This is due to the limited coverage of the asymptotic region, which may not be sufficient to accurately determine the convergence behaviour. Additionally, it may necessitate multiple flow solutions before reaching a valid conclusion.

Another aspect which needs due attention while making grid adaptation is the initial grid employed. The initial grid should possess a sufficient level of resolution. Employing a low initial grid density can lead to inaccurate simulation results and unsatisfactory flow field solutions. On the other hand, an excessively refined initial grid may not be feasible for high-fidelity studies involving viscous, turbulent or fully reacting flows. This is because the initial cell density may already be too high, making creating subsequent grids with even higher densities impractical.

Grid accuracy plays a critical role in the reliability and precision of hypersonic CFD simulations, as it directly influences the computed flow field. Given the high velocities involved, errors introduced upstream can rapidly amplify downstream.

Consequently, it is imperative to employ a meticulous grid or topology design to achieve suitable cell discretization and blocking structures. Factors such as grid resolution, grid clustering, cell shape, and cell size distribution must be thoroughly evaluated and selected both locally and across the entire domain. This careful assessment is essential for preventing the introduction of errors and inaccuracies into the computed results through numerical artefacts and uncaptured phenomena.

1.“Experimental Study of Hypersonic Fluid-Structure Interaction with Shock Impingement on a Cantilevered Plate”, Gaetano M D Currao, PhD Thesis, UNSW AUSTRALIA, March 2018.

2.“Investigation of “6X” Scramjet Inlet Configurations”, Stephen J. Alter, NASA/TM–2012–217761, September 2012.

3.“Numerical Simulation of Hypersonic Air Intake Flow in Scramjet Propulsion Using a Mesh-Adaptive Approach”, Sarah Frauholz, et al, AIAA Conference Paper · September 2012.

4.“Parametric Geometry, Structured Grid Generation, and Initial Design Study for REST-Class Hypersonic Inlets”, Paul G. Ferlemann et al.

5.“Numerical Simulation of Hypersonic Air Intake Flow in Scramjet Propulsion”, Sarah Frauholz et al, 5TH EUROPEAN CONFERENCE FOR AERONAUTICS AND SPACE SCIENCES (EUCASS), July 2013.

6.”Computational Prediction of NASA Langley HYMETS Arc Jet Flow with KATS”, Umran Duzel,AIAA conference paper, Jan 2018.

7.“Numerical simulations of the shock wave-boundary layer interactions”, Ismaïl Ben Hassan Saïdi, HAL Id: tel-02410034, 13 Dec 2019.

8.“The Role of Mesh Generation, Adaptation, and Refinement on the Computation of Flows Featuring Strong Shocks”, Aldo Bonfiglioli et al, Hindawi Publishing Corporation Modelling and Simulation in Engineering, Volume 2012, Article ID 631276.

9.”Numerical Investigation of Compressible Turbulent Boundary Layer Over Expansion Corner“, Tue T.Q. Nguyen et al., AIAA Conference Paper, October 2009.

By subscribing, you'll receive every new post in your inbox. Awesome!

The post Know your mesh for Hypersonic Intake CFD Simulations appeared first on GridPro Blog.

Here are instructions on how to import a surface CSV file from Stallion 3D into ParaView using the Point Dataset Interpolator:*

In Stallion 3D

Open ParaView.

Convert CSV to points:

Load the target surface mesh:

Apply Point Dataset Interpolator:

5. Visualize:

Take flight with your next project! Hanley Innovations offers powerful software solutions for airfoil design, wing analysis, and CFD simulations.

Here's what's taking off:

Hanley Innovations: Empowering engineers, students, and enthusiasts to turn aerodynamic dreams into reality.

Ready to soar? Visit www.hanleyinnovations.com and take your designs to new heights.

Stay tuned for more updates!

#airfoil #cfd #wingdesign #aerodynamics #iAerodynamics

Stallion 3D has updated features for accurate analysis

Stallion 3D 5.0 provides state of the art external aerodynamic analysis of your 3D designs directly on your Windows PC or Laptop. It will run on Windows 7 to 11.

Find out more by visiting https://www.hanleyinnovations.com/stallion3d.html

Why use 3DFoil 🤔

There are several reasons why you might use 3DFoil for wing analysis:

Here are some specific examples of when you might want to use 3DFoil:

If you are looking for an accurate, fast, and easy-to-use wing analysis software package, 3DFoil is a good option to consider.

Here are some additional benefits of using 3DFoil:

Overall, 3DFoil is a good choice for wing analysis if you are looking for an accurate, fast, and easy-to-use software package.

More information about 3DFoil can be found at: https://www.hanleyinnovations.com/3dfoil.html

Stallion 3D is an aerodynamics analysis software package that can be used to analyze golf balls in flight. The software runs on MS Windows 10 & 11 and can compute the lift, drag and moment coefficients to determine the trajectory. The STL file, even with dimples, can be read directly into Stallion 3D for analysis.

What we learn from the aerodynamics:

Stallion 3D strengths are:

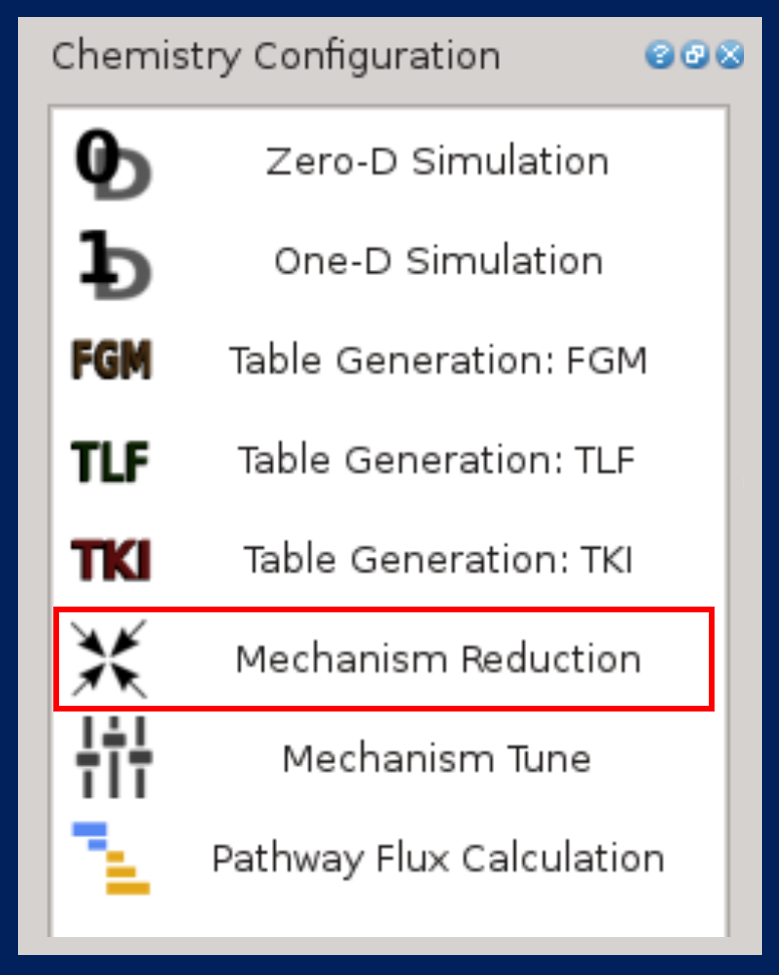

In the computation of turbulent flow, there are three main approaches: Reynolds averaged Navier-Stokes (RANS), large eddy simulation (LES), and direct numerical simulation (DNS). LES and DNS belong to the scale-resolving methods, in which some turbulent scales (or eddies) are resolved rather than modeled. In contrast to LES, all turbulent scales are modeled in RANS.

Another scale-resolving method is the hybrid RANS/LES approach, in which the boundary layer is computed with a RANS approach while some turbulent scales outside the boundary layer are resolved, as shown in Figure 1. In this figure, the red arrows denote resolved turbulent eddies and their relative size.

Depending on whether near-wall eddies are resolved or modeled, LES can be further divided into two types: wall-resolved LES (WRLES) and wall-modeled LES (WMLES). To resolve the near-wall eddies, the mesh needs to have enough resolution in both the wall-normal (y+ ~ 1) and wall-parallel directions (x+ and z+ ~ 10-50) in terms of the wall viscous scale as shown in Figure 1. For high-Reyolds number flows, the cost of resolving these near-wall eddies can be prohibitively high because of their small size.

In WMLES, the eddies in the outer part of the boundary layer are resolved while the near-wall eddies are modeled as shown in Figure 1. The near-wall mesh size in both the wall-normal and wall-parallel directions is on the order of a fraction of the boundary layer thickness. Wall-model data in the form of velocity, density, and viscosity are obtained from the eddy-resolved region of the boundary layer and used to compute the wall shear stress. The shear stress is then used as a boundary condition to update the flow variables.

During the past summer, AIAA successfully organized the 4th High Lift Prediction Workshop (HLPW-4) concurrently with the 3rd Geometry and Mesh Generation Workshop (GMGW-3), and the results are documented on a NASA website. For the first time in the workshop's history, scale-resolving approaches have been included in addition to the Reynolds Averaged Navier-Stokes (RANS) approach. Such approaches were covered by three Technology Focus Groups (TFGs): High Order Discretization, Hybrid RANS/LES, Wall-Modeled LES (WMLES) and Lattice-Boltzmann.

The benchmark problem is the well-known NASA high-lift Common Research Model (CRM-HL), which is shown in the following figure. It contains many difficult-to-mesh features such as narrow gaps and slat brackets. The Reynolds number based on the mean aerodynamic chord (MAC) is 5.49 million, which makes wall-resolved LES (WRLES) prohibitively expensive.

|

| The geometry of the high lift Common Research Model |

University of Kansas (KU) participated in two TFGs: High Order Discretization and WMLES. We learned a lot during the productive discussions in both TFGs. Our workshop results demonstrated the potential of high-order LES in reducing the number of degrees of freedom (DOFs) but also contained some inconsistency in the surface oil-flow prediction. After the workshop, we continued to refine the WMLES methodology. With the addition of an explicit subgrid-scale (SGS) model, the wall-adapting local eddy-viscosity (WALE) model, and the use of an isotropic tetrahedral mesh produced by the Barcelona Supercomputing Center, we obtained very good results in comparison to the experimental data.

At the angle of attack of 19.57 degrees (free-air), the computed surface oil flows agree well with the experiment with a 4th-order method using a mesh of 2 million isotropic tetrahedral elements (for a total of 42 million DOFs/equation), as shown in the following figures. The pizza-slice-like separations and the critical points on the engine nacelle are captured well. Almost all computations produced a separation bubble on top of the nacelle, which was not observed in the experiment. This difference may be caused by a wire near the tip of the nacelle used to trip the flow in the experiment. The computed lift coefficient is within 2.5% of the experimental value. A movie is shown here.

|

| Comparison of surface oil flows between computation and experiment |

|

| Comparison of surface oil flows between computation and experiment |

Multiple international workshops on high-order CFD methods (e.g., 1, 2, 3, 4, 5) have demonstrated the advantage of high-order methods for scale-resolving simulation such as large eddy simulation (LES) and direct numerical simulation (DNS). The most popular benchmark from the workshops has been the Taylor-Green (TG) vortex case. I believe the following reasons contributed to its popularity:

Using this case, we are able to assess the relative efficiency of high-order schemes over a 2nd order one with the 3-stage SSP Runge-Kutta algorithm for time integration. The 3rd order FR/CPR scheme turns out to be 55 times faster than the 2nd order scheme to achieve a similar resolution. The results will be presented in the upcoming 2021 AIAA Aviation Forum.

Unfortunately the TG vortex case cannot assess turbulence-wall interactions. To overcome this deficiency, we recommend the well-known Taylor-Couette (TC) flow, as shown in Figure 1.

Figure 1. Schematic of the Taylor-Couette flow (r_i/r_o = 1/2)

The problem has a simple geometry and boundary conditions. The Reynolds number (Re) is based on the gap width and the inner wall velocity. When Re is low (~10), the problem has a steady laminar solution, which can be used to verify the order of accuracy for high-order mesh implementations. We choose Re = 4000, at which the flow is turbulent. In addition, we mimic the TG vortex by designing a smooth initial condition, and also employing enstrophy as the resolution indicator. Enstrophy is the integrated vorticity magnitude squared, which has been an excellent resolution indicator for the TG vortex. Through a p-refinement study, we are able to establish the DNS resolution. The DNS data can be used to evaluate the performance of LES methods and tools.

Figure 2. Enstrophy histories in a p-refinement study

Happy 2021!

The year of 2020 will be remembered in history more than the year of 1918, when the last great pandemic hit the globe. As we speak, daily new cases in the US are on the order of 200,000, while the daily death toll oscillates around 3,000. According to many infectious disease experts, the darkest days may still be to come. In the next three months, we all need to do our very best by wearing a mask, practicing social distancing and washing our hands. We are also seeing a glimmer of hope with several recently approved COVID vaccines.

2020 will be remembered more for what Trump tried and is still trying to do, to overturn the results of a fair election. His accusations of wide-spread election fraud were proven wrong in Georgia and Wisconsin through multiple hand recounts. If there was any truth to the accusations, the paper recounts would have uncovered the fraud because computer hackers or software cannot change paper votes.

Trump's dictatorial habits were there for the world to see in the last four years. Given another 4-year term, he might just turn a democracy into a Trump dictatorship. That's precisely why so many voted in the middle of a pandemic. Biden won the popular vote by over 7 million, and won the electoral college in a landslide. Many churchgoers support Trump because they dislike Democrats' stances on abortion, LGBT rights, et al. However, if a Trump dictatorship becomes reality, religious freedom may not exist any more in the US.

Is the darkest day going to be January 6th, 2021, when Trump will make a last-ditch effort to overturn the election results in the Electoral College certification process? Everybody knows it is futile, but it will give Trump another opportunity to extort money from his supporters.

But, the dawn will always come. Biden will be the president on January 20, 2021, and the pandemic will be over, perhaps as soon as 2021.

The future of CFD is, however, as bright as ever. On the front of large eddy simulation (LES), high-order methods and GPU computing are making LES more efficient and affordable. See a recent story from GE.

|

| Figure 1. Various discretization stencils for the red point |

|

| p = 1 |

|

| p = 2 |

|

| p = 3 |

|

|

CL

|

CD

|

|

p = 1

|

2.020

|

0.293

|

|

p = 2

|

2.411

|

0.282

|

|

p = 3

|

2.413

|

0.283

|

|

Experiment

|

2.479

|

0.252

|

Author:

Allie Yuxin Lin

Marketing Writer

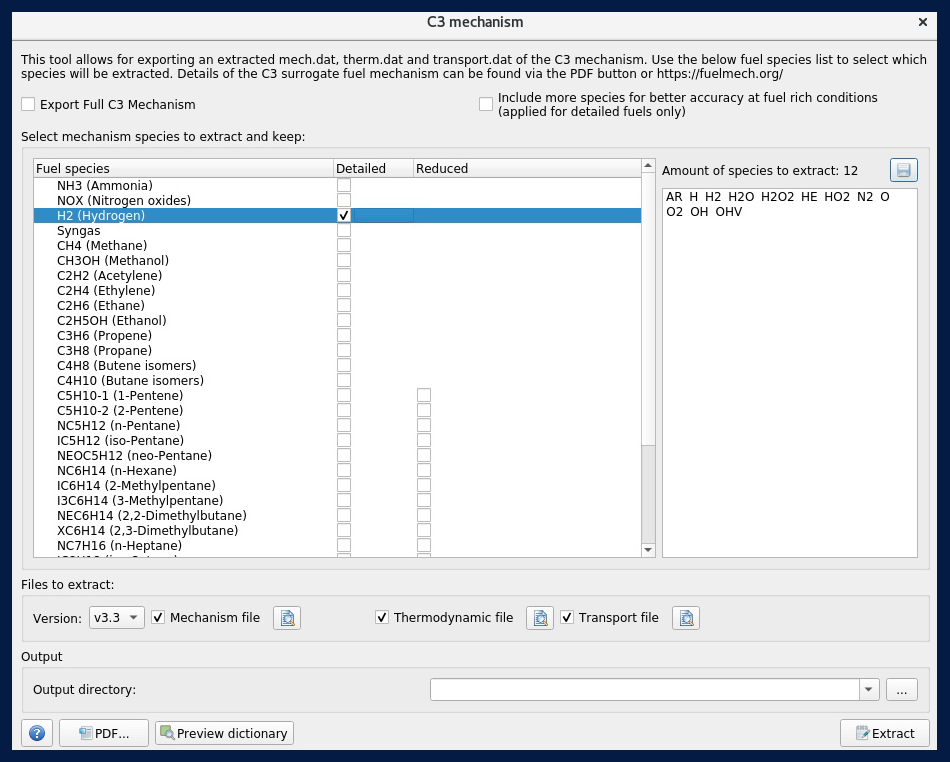

Imagine you’re a CFD engineer and you want to run a combustion simulation for a certain kind of reacting flow device. But before you can do that, you need to find a chemical mechanism that can mathematically represent the chemistry within the reacting fluid. So you scour the available literature to find published mechanisms from third parties that fit your case conditions. This time-consuming and inefficient process prompted us, and other like-minded individuals across academia and industry, to seek a more consolidated alternative.

The Computational Chemistry Consortium (C3), the brainchild of Convergent Science owners Kelly Senecal, Dan Lee, Eric Pomraning, and Keith Richards, was established with the goal of creating a comprehensive and detailed mechanism that would serve as an all-inclusive solution for fuel combustion chemistry. Creating this repository of mechanisms would also help us investigate and develop alternative fuels to create more sustainable technologies. Professor Henry Curran from the University of Galway leads the consortium from the technical side, working with research groups whose respective areas of expertise complement each other, including the University of Galway, Lawrence Livermore National Laboratory, Argonne National Laboratory, Politecnico di Milano, and RWTH Aachen University.

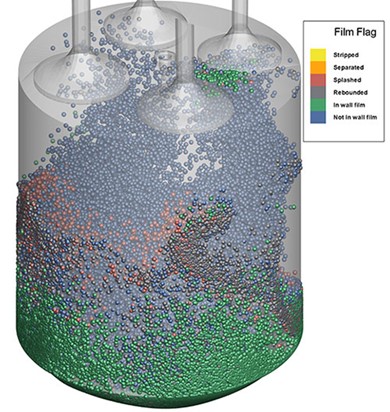

The summer of 2018 marked a milestone in combustion chemistry, as C3 officially kicked off. Following the directional guidance from a diverse group of industry partners, C3 develops chemical mechanisms that include pollutant chemistry like PAH and NOx, creates tools for generating surrogate and multi-fuel mechanisms, and improves reduction and merging tools. C3 operates with a top-down approach, featuring one large mechanism from which users can extract the specific chemistry for their fuel. This method allows C3’s technical team to validate the mechanism as a whole, rather than combine many small, independently-validated mechanisms. In December 2021, C3 published the first version of their mechanism, making it widely available to the combustion community. Since then, the mechanism has been integrated into our software, allowing you to combine the flexibility of C3 with the power of CONVERGE.

To generate your fuel chemistry mechanism with CONVERGE, start by identifying all the individual components for your fuel surrogate. CONVERGE offers a surrogate blender tool where you can specify fuel properties such as viscosity, H/C ratio, octane number, distillation data, and ignition delay. The blender tool will then use mixing rules to match the specified fuel properties and come up with a fuel surrogate. Alternatively, the experienced user may choose to handpick certain fuel species according to information laid out in a test fuel’s spec sheet.

After you’ve identified your fuel surrogate, you can use the extraction tool in CONVERGE Studio, which was designed specifically for the purpose of extracting fuel chemistry from the parent C3 mechanism.

In most cases involving traditional hydrocarbon fuels, your extracted mechanism will have hundreds to thousands of species, which is far too many to use for a 3D CFD simulation. To ensure computational efficiency while maintaining solution accuracy, you should reduce your mechanism to a manageable size using CONVERGE’s mechanism reduction process.

A key component of this process in CONVERGE is the analysis of autoignition, extinction, speciation, and/or laminar flamespeed simulations. Therefore, before you can begin your reduction process, you must consider the specific conditions of your engine/combustor under which these simulations are evaluated. These operating conditions include pressure, unburnt temperature, equivalence ratio, and EGR fractions. For example, if your mechanism is meant to be used for a diesel engine simulation, you must select a pressure range from the start of injection to peak cylinder pressure.

To reduce the number of species, a directed relation graph (DRG) will be constructed and error propagation (DRGEP) can be added for further precision. The DRGEP methodology works to remove species and corresponding reactions within the user-specified error bounds of ignition delay, extinction, speciation, and/or laminar flamespeed. Once the number of species is ~500, sensitivity analysis (SA) can be added to the existing DRGEP methodology for further reduction of species. The optimal resulting mechanism will have calculations that fall within a user-specified range and a reduced number of species, making these mechanisms practical for 3D combustion simulations.

When you have obtained the optimal reduced mechanism, the reaction rates of the most sensitive reactions can be tuned to match specific targets of this mechanism to those of the parent mechanism. Similarly to the reduction process, these targets are speciation, extinction, laminar flamespeed, and/or ignition delay. You can tune your mechanism using CONVERGE’s mechanism tuning tools, such as NLOPT, an open-source library for nonlinear local and global optimization; the MONTE-CARLO method, which uses randomization to solve problems that may be deterministic in principle; or CONGO, CONVERGE’s in-house genetic algorithm optimization tool. These methods focus on the pre-exponential factor, A, or the activation energy in the Arrhenius reaction equation.

After completing these steps, your reduced chemical mechanism is ready to be run in 3D CFD simulations. The flexibility, versatility, and ingenuity of C3 simplifies the process of modeling both traditional and alternative fuels in a variety of applications where combustion is involved. With C3, your days of manually searching through the literature for a specific mechanism are over. Welcome to a new era of ease!

If you would like to help set the direction of future C3 efforts and have access to our mechanisms before they are publicly available, we invite you to join our consortium. To learn more, please contact C3 Director Dr. Kelly Senecal at senecal@fuelmech.org.

Author:

Allie Yuxin Lin

Marketing Writer

The trials of climate change and humanity’s desire to mitigate our carbon footprint is motivating the research and development of renewable technologies for the transportation and energy sectors. Hydrogen is a promising technology, with the potential to address issues in energy security, pollution, emissions reduction, and sustainability. Hydrogen is carbon free, abundant, and can be stored as a gas or a liquid, making it an important player in the transition toward a cleaner planet.

However, the challenges associated with devising safe and reliable storage methods delay the increase of hydrogen production. Hydrogen’s highly diffusive and corrosive nature makes it prone to leaking, while its unique thermodynamic properties, such as the negative Joule-Thompson effect, require engineers to rethink traditional storage infrastructure. Hydrogen’s low compressibility and extreme sensitivity to the environment mean tanks should both be strong enough to withstand high pressures and flexible enough to handle large temperature fluctuations. Tank design should also account for hot pockets formed due to the increased pressure when hydrogen is compressed, which could damage the tank’s structural integrity.

Computational fluid dynamics (CFD) can help overcome some of the hurdles associated with hydrogen storage. CFD provides insight into the behavior of various fluids and gasses in different environments, so it can be used to optimize the design of hydrogen fuel systems, including fuel cells, storage tanks, and delivery systems. CFD can be used to identify areas of improvement in the fuel system design and help pinpoint potential safety hazards.

CONVERGE is a powerful CFD software whose unique capabilities make it advantageous for simulating hydrogen storage. Autonomous meshing removes the mesh generation bottleneck, while Adaptive Mesh Refinement (AMR) continuously adjusts the mesh throughout the simulation. Conjugate heat transfer (CHT) modeling solves for the heat exchange between the fluids and solids in the system. Additionally, CONVERGE provides multiple turbulence models to efficiently capture the flow dynamics within the storage unit.

The HyTransfer project1 was funded by the European Union to study the physics in the hydrogen filling process, with the hopes of providing guidelines on how to achieve an efficient filling strategy. Using the framework and the experimental data publicly available for the HyTransfer project, we performed a validation study to showcase the value of CONVERGE in hydrogen storage.

The Hexagon 36 L Type IV tank consists of a polymer liner encased in a composite wrapping, providing the necessary structural length to withstand large pressures up to 70 MPa. A liner thickness of 4 mm and an injector diameter of 10 mm were chosen for the study. In line with existing literature,2,3,4 we simulated half the horizontal tank domain, reducing overall computational cost. We provided the inlet mass flow rate profile and monitored the development of the tank pressure from the initial state.

CONVERGE’s graphical user interface, CONVERGE Studio, allows users to take advantage of a wide variety of geometry manipulation and repair tools during case setup. Since hydrogen’s behavior is known to deviate from ideal gas representations, CONVERGE provides the option to use real gas properties.

With the density-based PISO solver, we were able to achieve rapid convergence while simultaneously capturing the heat fluxes between different regions with CONVERGE’s CHT analysis.

As the injector diameter used for hydrogen tanks typically ranges from 3–10 mm, the incoming flow velocity can reach up to 300 m/s. High jet penetration plays a critical role in maintaining circulation within the tank, and mesh embedding used in conjunction with CONVERGE’s AMR can capture the jet profile with a high degree of accuracy.

To get the appropriate jet penetration and reduce the spreading over-prediction, the RNG k-epsilon constant in the turbulence model was modified according to past research.2,3,4

Figure 1 shows the velocity contours of the hydrogen jet during the filling process. The velocity decreases rapidly as the filling progresses due to the compression of hydrogen. The flapping motion of the jet is related to flow circulation within the tank, which is important when considering the redistribution of thermal gradients. In our study, this circulation caused hot pockets to form near the injection side of the tank.

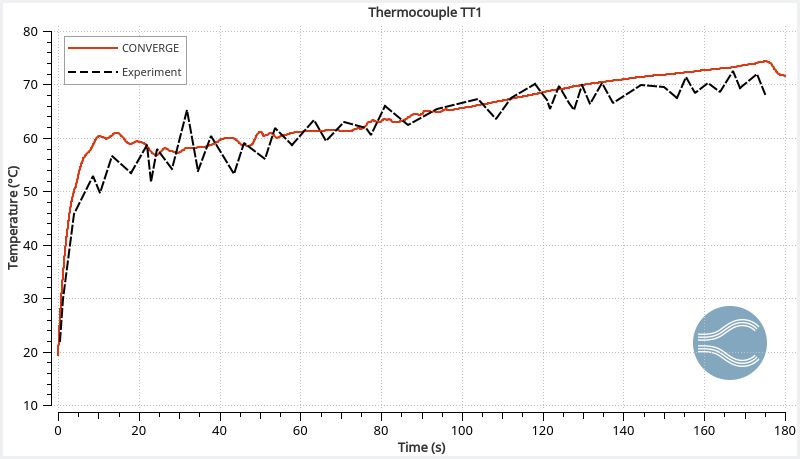

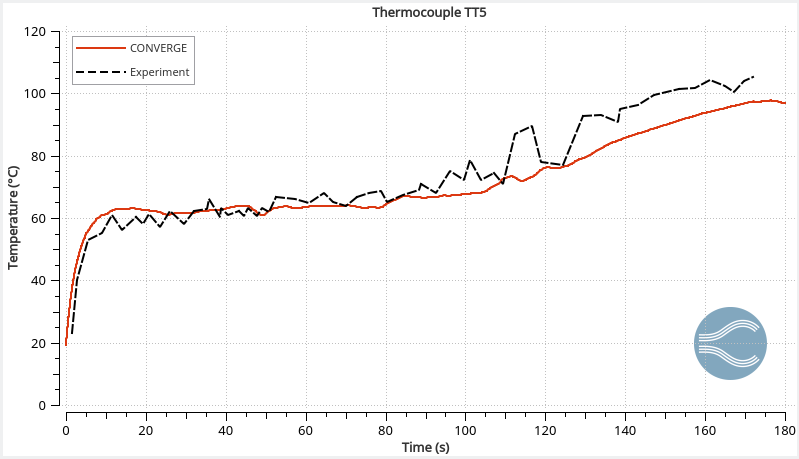

The temperature profiles within the tank agree with the experimental data (Figure 2), demonstrating CONVERGE can capture the complex thermodynamics of hydrogen. The reading at thermocouple 5 (TT5) is higher than thermocouple 1 (TT1) because long filling times can cause thermal stratification. As the filling progresses, the jet velocity decreases and the circulation within the tank stabilizes.

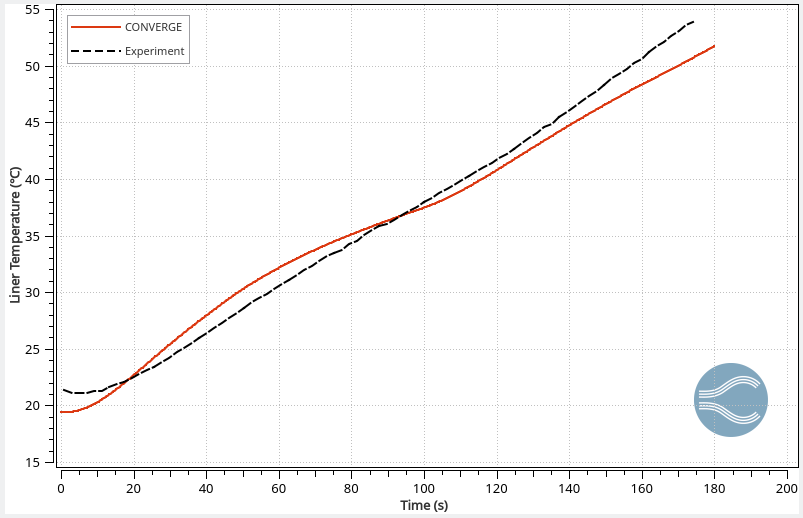

The ideal material for a hydrogen storage tank is lightweight with excellent thermal integrity and strength. CONVERGE’s CHT analysis enables engineers to assess the thermal gradients within the tank’s structure and helps in the proper selection of tank materials. Figure 3 shows the predicted temperature at the liner-composite interface compared to the experiment, demonstrating CONVERGE can also be used to capture the tank’s internal thermal behavior.

Using CONVERGE, we simulated the flow dynamics and thermal behavior during the hydrogen filling process; our results aligned well with previous experimental data.1 We assessed major flow features and identified recirculating vortical structures caused by the fluctuating behavior of the jet. CONVERGE accurately captured temperature profiles inside the tank, and its CHT capabilities predicted the liner-composite interface temperature.

The ease of use, flexibility, accuracy, and rich set of features make CONVERGE a highly effective tool for studying hydrogen tank storage. Check out our white paper, “Exploring Hydrogen Tank Filling Dynamics,” to learn more about how CONVERGE is helping engineers tackle an important challenge of the modern era!

[1] Ravinel, B., Acosta, B., Miguel, D., Moretto, P., Ortiz-Cobella, R., Janovic, G., and van der Löcht, U., “HyTransfer, D4.1 – Report on the experimental filling test campaign,” 2017.

[2] Melideo, D. and Baraldi, D., “CFD analysis of fast filling strategies for hydrogen tanks and their effects on key-parameters,” International Journal of Hydrogen Energy, 40, 735-745, 2015.

[3] Melideo, D., Baraldi, D., Acosta-Iborra, A., Cebolla, R.O., and Moretto, P., “CFD simulations of filling and emptying of hydrogen tanks,” International Journal of Hydrogen Energy, 42, 7304-7313, 2017.

[4] Gonin, R., Horgue, P., Guibert, R., Fabre, D., and Bourget, R., “A computational fluid dynamic study of the filling of a gaseous hydrogen tank under two contrasted scenarios.” International Journal of Hydrogen Energy, 47(55), 23278-23292, 2022.

Author:

Hannah Darling

Graduate Research Assistant, University of Massachusetts Amherst

As computational fluid dynamics (CFD) enthusiasts, we must sometimes take opportunities to toot our own horns when it comes to the vast capabilities of high-fidelity modeling techniques. One great opportunity appears in the floating offshore wind (OSW) industry.

Floating OSW has experienced significant growth recently and will be a key player in the global clean energy transition. Floating systems are becoming particularly favorable as they offer many advantages to their fixed-bottom or onshore counterparts. Most notably, they enable access to deeper waters with more space and higher wind potential. Floating OSW also minimizes concerns for visual, noise, and environmental impacts that on/near-shore turbines face.

Currently, there are only three operational floating OSW farms in the world—Hywind Scotland, Kincardine, and Windfloat Atlantic—but there are several others in the construction or planning phases, and many countries are making major research and development strides to further advance this technology.1

In the United States, the Floating Offshore Wind Shot outlines two key targets: to reach 15 GW of installed floating OSW capacity and to reduce the levelized cost of energy by 70%, both by 2035. According to this initiative, the U.S. has a “critical window of opportunity” to bring down technology costs and become a world leader in floating OSW design, deployment, and manufacturing. The industry will also provide significant economic benefits by producing thousands of jobs in wind manufacturing, installation, and operations, especially in coastal communities.2

However, as with many upcoming renewable technologies, there is still much work to be done to optimize the design and implementation of floating offshore wind turbines (FOWTs) to reduce life cycle costs and maximize performance before they can become widespread. One engineering solution is to use innovative modeling techniques to simulate and predict the performance of these FOWT systems prior to full-scale implementation.

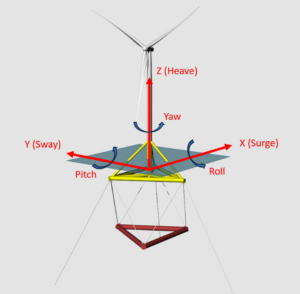

Floating OSW systems are fairly complex, consisting of a wind turbine, a floating support platform, and mooring lines anchoring it to the sea floor. Unlike fixed-bottom platforms, FOWTs face six degrees of freedom (DOF) of motion (shown in Figure 1), meaning they can translate and rotate about all three axes. Such a range of freedom, along with the varying wind and wave conditions experienced by these systems, makes load, performance, and dynamic responses difficult to predict.3 These systems also experience a type of “coupling”, where the wind loading on the turbine and wave loading on the platform affect each other. Then, when the mooring system is considered, the analysis of the overall FOWT system is complicated even further!4 To address these dynamic response challenges and better predict the behavior of these systems, there has been an increasing focus on the improvement of FOWT modeling—in particular, numerical modeling—techniques.

While FOWT designers use a wide range of numerical models to verify and predict the performance of their designs, “high-fidelity” tools like CFD are especially useful as they are capable of modeling the complex fluid-structure interactions (FSI) between the water, air, turbine, and platform (among many other benefits). CFD therefore enables researchers to perform full-scale, direct modeling of FOWT systems without the presence of scale effects (faced by physical models) or over-simplified modeling techniques (of lower-fidelity models).4

Over the past year, I have been working as a graduate research assistant in Dr. David Schmidt’s Multi-Phase Flow Simulation Laboratory at the University of Massachusetts Amherst. In collaboration with Dr. Shengbai Xie and Dr. Jasim Sadique of Convergent Science, we have been simulating a FOWT platform using CONVERGE CFD software.

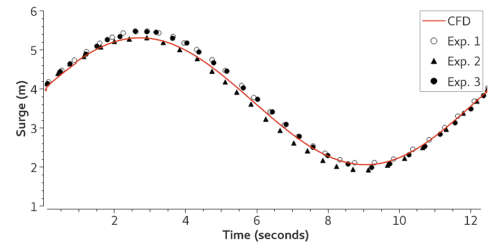

In our work, we simulate the Stiesdal TetraSpar FOWT platform5 under various environmental load conditions defined by Phase IV of the OC6 (Offshore Code Comparison Collaboration, Continued with Correlation and unCertainty) project. This project addresses a need for FOWT model verification and validation via a three-sided comparison between engineering-level, high-fidelity CFD, and experimental results. The experimental results used in the OC6 project were collected at the University of Maine on a 1:43 scale model of the TetraSpar platform2 and was the basis of our CFD comparison.

The CFD model of this FOWT system includes the platform and the moorings but excludes simulation of the wind turbine for simplicity, as Phase IV of OC6 focuses only on the hydrodynamic challenges associated with this system.2 The mooring configuration consists of three chain catenary (free-hanging) lines with fixed anchor locations, as well as a “sensor umbilical”. The sensor umbilical was a required addition in the physical model to house the sensor cables, so it was also included in the CFD model for effective comparison.

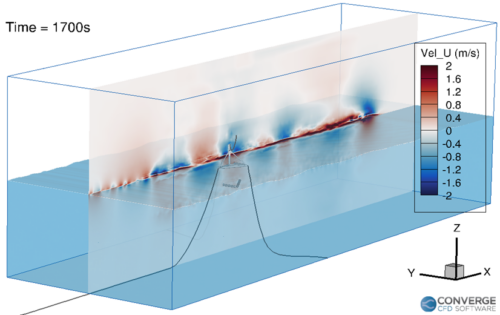

The computational domain (Figure 2) is modeled as a box in which waves are introduced at the inlet boundary, and relaxation zones exist at the inlet and outlet to gradually enforce these waves to a given condition: theoretical wave conditions at the inlet and calm water conditions at the outlet.6 The volume of fluid (VOF) method simulates the multi-phase (air/water) flow, and the moorings are dynamic lumped-mass segments including seabed interaction effects. The cut-cell Cartesian mesh models the 6 DOF FSI.